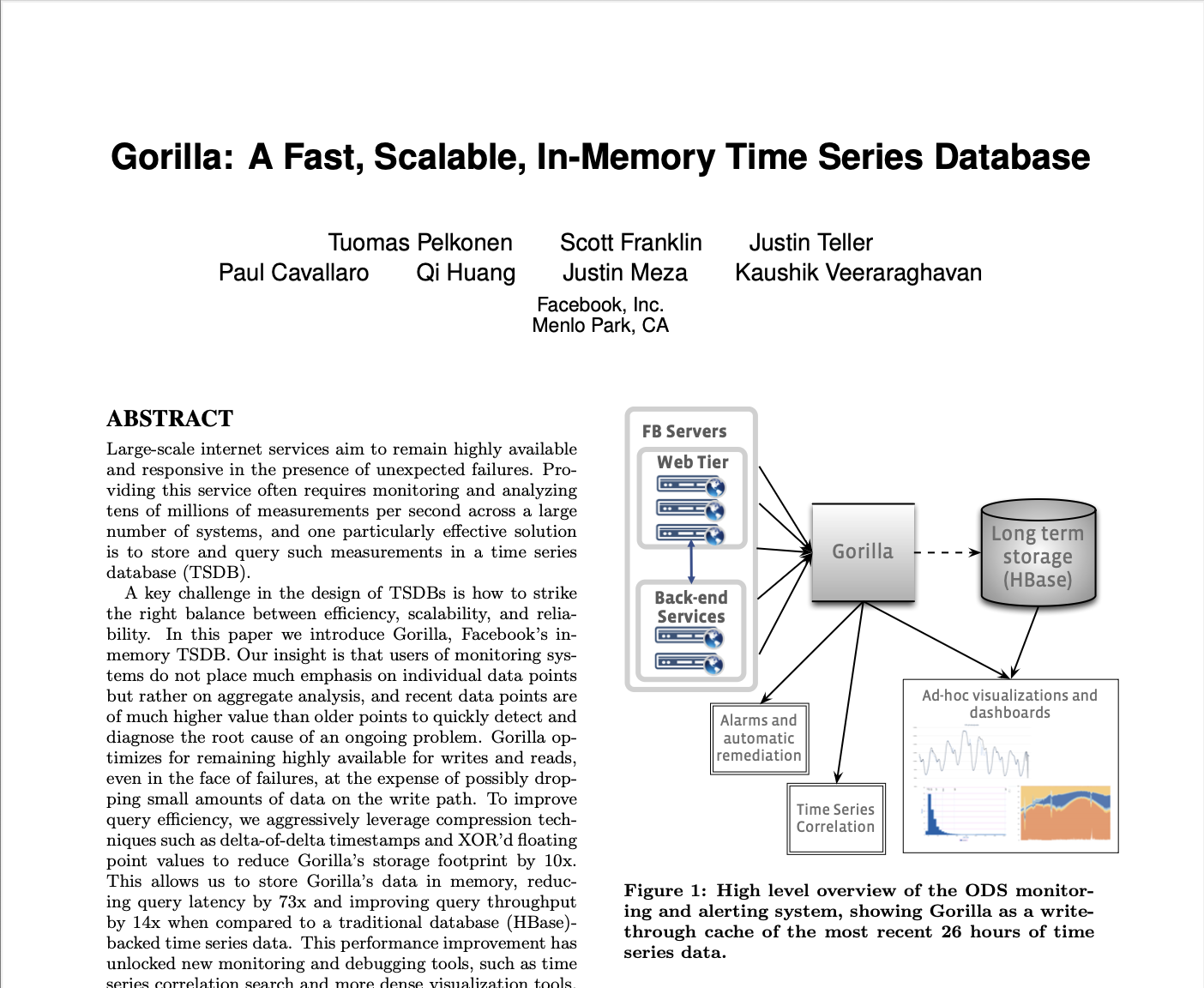

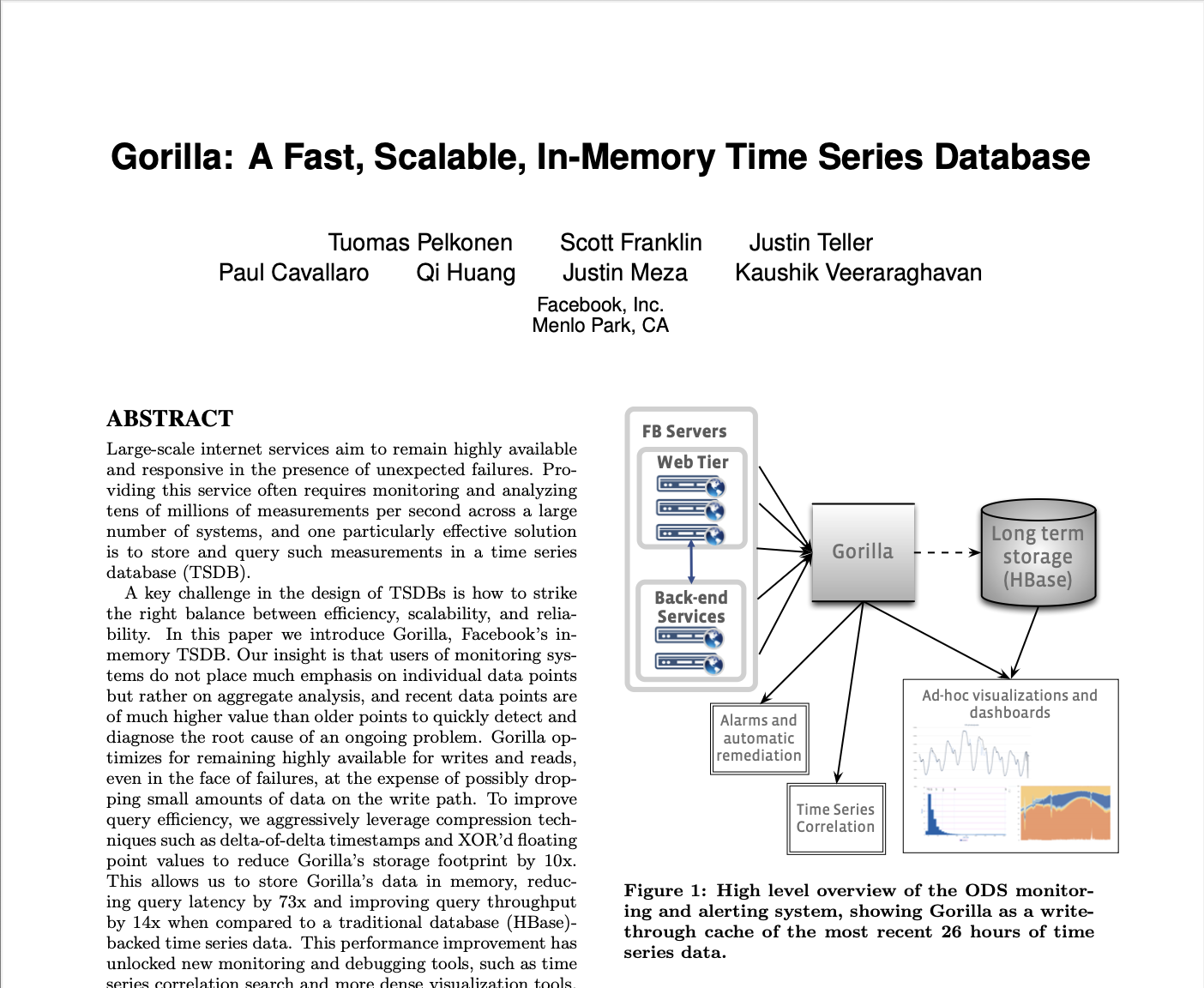

Time Series Database fundamentals

Machine Learning Algorithms, TensorFlow, Haskell, OCaml and Racket.

Snowflake is a network service for generating unique ID numbers at high scale with some simple guarantees. I am trying to code this in OCaml.
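The excerpt states the goal but does not show the generator. Here is a minimal OCaml sketch. It assumes Twitter's original 64-bit layout (41-bit millisecond timestamp, 10-bit worker id, 12-bit per-millisecond sequence) and a hypothetical custom epoch. The names `make_generator`, `next_id`, `compose` and `decode` are illustrative. The clock is passed in as `now` so the logic can be tested without the `Unix` library.

```ocaml
(* Snowflake-style ID generator (sketch). Assumed layout:
   [timestamp: 41 bits][worker id: 10 bits][sequence: 12 bits]. *)
let worker_bits = 10
let sequence_bits = 12
let max_sequence = (1 lsl sequence_bits) - 1 (* 4095 *)

(* Hypothetical custom epoch: 2020-01-01T00:00:00Z in milliseconds. *)
let custom_epoch_ms = 1_577_836_800_000

type generator = {
  worker_id : int; (* 0 .. 1023 *)
  mutable last_ms : int;
  mutable sequence : int;
}

let make_generator worker_id =
  if worker_id < 0 || worker_id >= 1 lsl worker_bits then
    invalid_arg "worker_id out of range";
  { worker_id; last_ms = -1; sequence = 0 }

(* Pack the three fields into one native int (assumes 64-bit OCaml). *)
let compose ~ms ~worker_id ~sequence =
  ((ms - custom_epoch_ms) lsl (worker_bits + sequence_bits))
  lor (worker_id lsl sequence_bits)
  lor sequence

(* Unpack an ID into (absolute ms, worker id, sequence). *)
let decode id =
  let sequence = id land max_sequence in
  let worker_id = (id lsr sequence_bits) land ((1 lsl worker_bits) - 1) in
  let ms = (id lsr (worker_bits + sequence_bits)) + custom_epoch_ms in
  (ms, worker_id, sequence)

let rec next_id ~now g =
  let ms = now () in
  if ms < g.last_ms then failwith "clock moved backwards"
  else if ms > g.last_ms then begin
    (* New millisecond: reset the sequence. *)
    g.last_ms <- ms;
    g.sequence <- 0;
    compose ~ms ~worker_id:g.worker_id ~sequence:0
  end
  else if g.sequence < max_sequence then begin
    (* Same millisecond: bump the sequence. *)
    g.sequence <- g.sequence + 1;
    compose ~ms ~worker_id:g.worker_id ~sequence:g.sequence
  end
  else
    (* 4096 IDs already issued this millisecond: spin until the clock ticks. *)
    next_id ~now g
```

OCaml's native `int` has 63 bits on 64-bit platforms. This means the sketch only produces non-negative IDs while the elapsed time fits in 40 bits, roughly 34 years after the epoch. An `Int64` version would avoid that limit.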

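```ocaml
(* Fragment: [i] and [keys] are bound by the enclosing code (not shown).
   Copy the key at position i - 1 into position i, rebuilding the list
   with List.mapi instead of mutating it. Requires i >= 1, otherwise
   List.nth raises Invalid_argument. *)
let n_4keys =
  List.mapi (fun j el -> if i = j then List.nth keys (i - 1) else el) keys
in (* TODO: Array is mutable and needed here *)
```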

This is the second part, which shows the OCaml port of F# code that implements a stack-based virtual machine. The functors turned out to be very different from the structure of the F# code.
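The excerpt does not include the VM itself. The following minimal sketch uses a hypothetical instruction set (`Push`, `Add`, `Sub`, `Mul`, `Dup`, `Swap`) rather than the one in the original F# code. It shows one way a functor can abstract the value type while the interpreter loop stays a plain fold over the program. The module names `VALUE`, `Make_vm` and `Int_vm` are illustrative.

```ocaml
(* The value domain is abstracted behind a signature, so the same VM
   can be instantiated for different value types via a functor. *)
module type VALUE = sig
  type t
  val add : t -> t -> t
  val sub : t -> t -> t
  val mul : t -> t -> t
end

module Make_vm (V : VALUE) = struct
  type instr =
    | Push of V.t (* push a constant *)
    | Add | Sub | Mul (* pop b, pop a, push (a op b) *)
    | Dup (* duplicate the top of the stack *)
    | Swap (* exchange the top two values *)

  exception Stack_underflow

  (* The stack is a list whose head is the top of the stack. *)
  let step stack instr =
    match instr, stack with
    | Push v, s -> v :: s
    | Add, b :: a :: s -> V.add a b :: s
    | Sub, b :: a :: s -> V.sub a b :: s
    | Mul, b :: a :: s -> V.mul a b :: s
    | Dup, a :: s -> a :: a :: s
    | Swap, b :: a :: s -> a :: b :: s
    | (Add | Sub | Mul | Dup | Swap), _ -> raise Stack_underflow

  (* Run a program by folding [step] over it from an empty stack. *)
  let run program = List.fold_left step [] program
end

module Int_vm = Make_vm (struct
  type t = int
  let add = ( + )
  let sub = ( - )
  let mul = ( * )
end)
```

For example, `Int_vm.run [Push 2; Push 3; Add; Push 4; Mul]` leaves `[20]` on the stack.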

Notwithstanding the loftiness of the title, this is just a tutorial inspired by Implementing a JIT Compiled Language with Haskell and LLVM. I chose OCaml and decided to select my own libraries to accomplish this. For example, the parser uses Angstrom, which I knew nothing about.

An attempt to implement the Raft distributed consensus protocol using OCaml 5 Eio, effect handlers, and capnp-rpc-lwt, an RPC library based on Lwt. Eio allows integration with the Lwt framework.
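Setting Eio and capnp-rpc-lwt aside, the core of Raft's leader election is a pure decision rule. Below is a sketch of the RequestVote receiver logic as described in the Raft paper (sections 5.2 and 5.4). The receiver rejects stale terms and moves to a newer term when it sees one. It grants at most one vote per term, and only to a candidate whose log is at least as up-to-date as its own. The record types and function names are hypothetical, and persistence and RPC are omitted.

```ocaml
(* Sketch of Raft's RequestVote receiver rule; no networking or storage. *)
type node_state = {
  current_term : int;
  voted_for : int option; (* candidate voted for in current_term *)
  last_log_index : int;
  last_log_term : int;
}

type vote_request = {
  term : int;
  candidate_id : int;
  cand_last_log_index : int;
  cand_last_log_term : int;
}

(* The candidate's log is at least as up-to-date if its last entry has a
   higher term, or the same term and an index at least as large. *)
let log_ok st req =
  req.cand_last_log_term > st.last_log_term
  || (req.cand_last_log_term = st.last_log_term
      && req.cand_last_log_index >= st.last_log_index)

(* Returns the (possibly updated) state and whether the vote is granted. *)
let handle_request_vote st req =
  if req.term < st.current_term then (st, false)
  else
    (* A newer term clears any vote cast in an older term. *)
    let st =
      if req.term > st.current_term then
        { st with current_term = req.term; voted_for = None }
      else st
    in
    let can_vote =
      match st.voted_for with
      | None -> true
      | Some id -> id = req.candidate_id
    in
    if can_vote && log_ok st req then
      ({ st with voted_for = Some req.candidate_id }, true)
    else (st, false)
```

Keeping this rule pure makes it easy to test separately from the fibers and RPC layer.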

The collaborative editor patterns that I try to code can be of many kinds: string manipulation algorithms, CRDTs and even LLM inference. So even though a full-fledged editor is not in scope here, many algorithms like these can be explored, and a functional editor can be built.
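CRDTs are one of the algorithm families mentioned above. Here is a sketch of the simplest state-based CRDT, a grow-only counter (G-Counter), using only the OCaml standard library. A text-editing sequence CRDT is considerably more involved and is not shown. The function names are illustrative.

```ocaml
(* State-based grow-only counter (G-Counter) CRDT.
   Each replica increments only its own slot. Merge takes the
   per-replica maximum, which is commutative, associative and
   idempotent, so replicas converge regardless of delivery order. *)
module IntMap = Map.Make (Int)

type g_counter = int IntMap.t (* replica id -> count *)

let empty : g_counter = IntMap.empty

let increment (replica : int) (c : g_counter) : g_counter =
  IntMap.update replica
    (function None -> Some 1 | Some n -> Some (n + 1))
    c

(* The counter's value is the sum over all replicas. *)
let value (c : g_counter) = IntMap.fold (fun _ n acc -> n + acc) c 0

let merge (a : g_counter) (b : g_counter) : g_counter =
  IntMap.union (fun _ x y -> Some (max x y)) a b
```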

I know of a few ways to learn functional programming languages: one could read a book or read source code. My approach is to port source code to my favorite language, so I learn two different languages at the same time, the source and the target. It has been very productive for me.

I am reading about the semantics of programming languages, and here is an attempt to write Racket code that parses and interprets toy languages. More details will be added. The code here is not the final version, but it compiles. It shows the progress made as I learn the nuances of Racket and types.
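To illustrate the kind of toy language such posts interpret, here is a sketch in OCaml rather than the Racket the posts use. It defines a hypothetical expression language with `let`, `if`, integer addition and comparison. The big-step evaluator has one rule per constructor. The constructor names and the `Eval_error` exception are illustrative.

```ocaml
(* A toy expression language and its big-step evaluator. *)
type expr =
  | Num of int
  | Bool of bool
  | Var of string
  | Add of expr * expr
  | Less of expr * expr
  | If of expr * expr * expr
  | Let of string * expr * expr (* let x = e1 in e2 *)

type value = VInt of int | VBool of bool

exception Eval_error of string

(* The environment is an association list from names to values;
   newer bindings shadow older ones because they are consed on the front. *)
let rec eval env = function
  | Num n -> VInt n
  | Bool b -> VBool b
  | Var x ->
      (match List.assoc_opt x env with
       | Some v -> v
       | None -> raise (Eval_error ("unbound variable " ^ x)))
  | Add (a, b) ->
      (match eval env a, eval env b with
       | VInt x, VInt y -> VInt (x + y)
       | _ -> raise (Eval_error "Add expects integers"))
  | Less (a, b) ->
      (match eval env a, eval env b with
       | VInt x, VInt y -> VBool (x < y)
       | _ -> raise (Eval_error "Less expects integers"))
  | If (c, t, e) ->
      (match eval env c with
       | VBool true -> eval env t
       | VBool false -> eval env e
       | VInt _ -> raise (Eval_error "If expects a boolean"))
  | Let (x, e1, e2) -> eval ((x, eval env e1) :: env) e2
```

For instance, `let x = 2 in if x < 3 then x + 10 else 0` is `Let ("x", Num 2, If (Less (Var "x", Num 3), Add (Var "x", Num 10), Num 0))` and evaluates to `VInt 12`.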

Whenever I code data structures like B-trees, LSM trees, etc. in OCaml, I read SML, Rust or C++ code. The code I read is in repositories that implement a caching layer or databases like https://rethinkdb.com. I don’t find any direct OCaml references, so I ported this Haskell two-three tree by http://matthew.brecknell.net/post/btree-gadt/…. Moreover, since I am still learning, it is hard to identify succinct functional code, as some examples seem to be imperative.
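The linked post builds its tree with a GADT. One invariant such a GADT can enforce is that every leaf sits at the same depth. Below is a sketch of that idea in OCaml using a type-level depth index (`z`, `'n s`). The names are illustrative and not taken from the post. Only lookup is shown; insertion, the harder part, is omitted.

```ocaml
(* Type-level naturals used only as a depth index. *)
type z = Z
type 'n s = S of 'n

(* ('a, 'n) node is a 2-3 tree of keys 'a whose leaves are all at depth 'n.
   All children of a Node2 or Node3 share one depth, so an unbalanced
   tree does not type-check. *)
type ('a, 'n) node =
  | Leaf : ('a, z) node
  | Node2 : ('a, 'n) node * 'a * ('a, 'n) node -> ('a, 'n s) node
  | Node3 :
      ('a, 'n) node * 'a * ('a, 'n) node * 'a * ('a, 'n) node
      -> ('a, 'n s) node

(* A tree of any depth: the depth index is hidden existentially. *)
type 'a tree = Tree : ('a, 'n) node -> 'a tree

(* Lookup recurses at a smaller depth, which needs polymorphic recursion,
   hence the explicit locally abstract types. *)
let rec mem : type a n. a -> (a, n) node -> bool =
 fun x t ->
  match t with
  | Leaf -> false
  | Node2 (l, k, r) ->
      let c = compare x k in
      if c = 0 then true else if c < 0 then mem x l else mem x r
  | Node3 (l, k1, m, k2, r) ->
      let c1 = compare x k1 in
      if c1 = 0 then true
      else if c1 < 0 then mem x l
      else
        let c2 = compare x k2 in
        if c2 = 0 then true else if c2 < 0 then mem x m else mem x r

let member x (tr : 'a tree) = match tr with Tree t -> mem x t
```

The type checker rejects `Tree (Node2 (Leaf, 1, Node2 (Leaf, 2, Leaf)))` because the two children have depths `z` and `z s`.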

This is my attempt to code a Ray Tracer in Racket. Details are forthcoming.
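For orientation, the first geometric test most ray tracers implement is ray-sphere intersection. The following OCaml sketch (the post itself uses Racket, and the names here are hypothetical) substitutes the ray into the sphere equation, solves the resulting quadratic, and returns the nearest hit in front of the ray origin.

```ocaml
(* Minimal vector type and ray-sphere intersection. *)
type vec = { x : float; y : float; z : float }

let sub a b = { x = a.x -. b.x; y = a.y -. b.y; z = a.z -. b.z }
let dot a b = (a.x *. b.x) +. (a.y *. b.y) +. (a.z *. b.z)

(* Ray o + t d, sphere with centre c and radius r.
   |o + t d - c|^2 = r^2 expands to a t^2 + b t + c' = 0 with
   a = d.d, b = 2 d.(o - c), c' = (o - c).(o - c) - r^2.
   Returns the smallest root t > eps, or None if the ray misses. *)
let hit_sphere ~origin ~dir ~centre ~radius =
  let oc = sub origin centre in
  let a = dot dir dir in
  let b = 2.0 *. dot dir oc in
  let c = dot oc oc -. (radius *. radius) in
  let disc = (b *. b) -. (4.0 *. a *. c) in
  if disc < 0.0 then None
  else
    let sq = sqrt disc in
    let eps = 1e-6 in
    let t1 = (-. b -. sq) /. (2.0 *. a) in
    let t2 = (-. b +. sq) /. (2.0 *. a) in
    if t1 > eps then Some t1 else if t2 > eps then Some t2 else None
```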

I hope to add details as I understand this field better. I may also use multiple languages to code parts of algorithms.

Yet another attempt to closely replicate the popular PyTorch code from https://karpathy.ai/zero-to-hero.html, close on the heels of my basic exploration at https://mohanr.github.io/Exploring-Transformer-Architecture/.

I set about learning the Transformer architecture from first principles. Before long I realized I needed to read many arXiv research papers, starting with ‘Attention Is All You Need’. There are also older foundational papers that deal with this.
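The central formula of ‘Attention Is All You Need’ is scaled dot-product attention, Attention(Q, K, V) = softmax(QKᵀ / √d_k) V. Here is a minimal OCaml sketch of it. It covers a single head, with no masking and no learned projections, and uses plain `float array array` matrices. The helper names are illustrative.

```ocaml
(* Scaled dot-product attention: softmax(Q K^T / sqrt d_k) V.
   Matrices are float array array (rows of columns). *)
let matmul a b =
  let n = Array.length a and m = Array.length b.(0) and k = Array.length b in
  Array.init n (fun i ->
    Array.init m (fun j ->
      let s = ref 0.0 in
      for p = 0 to k - 1 do
        s := !s +. (a.(i).(p) *. b.(p).(j))
      done;
      !s))

let transpose a =
  let rows = Array.length a and cols = Array.length a.(0) in
  Array.init cols (fun j -> Array.init rows (fun i -> a.(i).(j)))

(* Row-wise softmax; subtracting the row maximum keeps exp from overflowing. *)
let softmax_rows a =
  Array.map
    (fun row ->
      let mx = Array.fold_left max neg_infinity row in
      let e = Array.map (fun v -> exp (v -. mx)) row in
      let total = Array.fold_left ( +. ) 0.0 e in
      Array.map (fun v -> v /. total) e)
    a

let attention q k v =
  let d_k = float_of_int (Array.length k.(0)) in
  let scores = matmul q (transpose k) in
  let scaled = Array.map (Array.map (fun s -> s /. sqrt d_k)) scores in
  matmul (softmax_rows scaled) v
```

Each output row is a weighted average of the rows of V. When every key scores the same against a query, the weights are uniform and the output is the mean of V's rows.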

I recently started dabbling in Programs and proofs using Spacemacs and its Coq layer. Since I haven’t studied this subject before, I start by setting up and using the tools.

As part of my effort to engage with the open-source community, I started answering Stack Overflow questions. I began by asking a few decent questions, then answered some of my own questions and accepted those answers. Gradually I understood that this community site is not only about answering but also about asking good questions that others can answer.

As part of this year’s lecture series, Yoshua Bengio spoke about Deep Learning research in Chennai. I will add more details gradually, as the topics he covered have to be researched.

At this time, this article is quite unwieldy and contains hundreds of lines of code without proper explanation. It has to be split up, edited and published as another series. Moreover, I have learnt more about how to code in a functional language by consulting some experts. I will edit it in due course.

In the second part, I explain more language features along with code.

Many programming problems lend themselves easily to solutions in functional programming languages. It is not hard to convince ourselves of this after writing code in a language like OCaml or Haskell.